For Organizations

Lumenoid is intended for organizations that build, deploy, or govern AI systems in contexts where human autonomy, accountability, and interpretability must remain intact as systems scale.

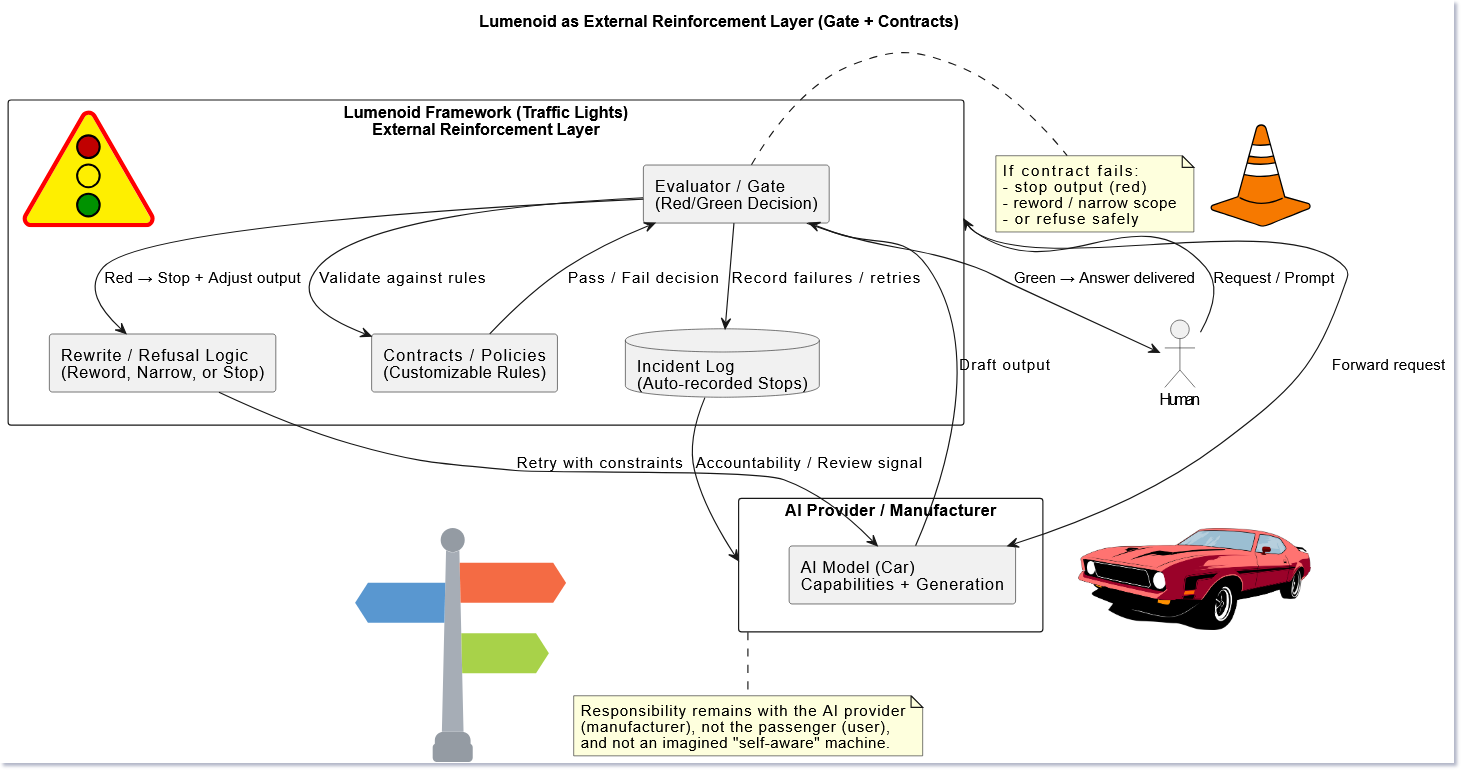

Lumenoid is not a product, service, or compliance tool. It is an ethical and structural framework that organizations may adopt or adapt to govern how AI outputs reach humans.

The framework operates as an external governance layer, applied around an AI system rather than inside it. It constrains behavior through explicit boundaries, validation, and traceability, without prescribing decisions, values, or outcomes.

💠 Why Organizations Adopt Lumenoid

As AI systems grow more capable and confident, responsibility can quietly shift away from human actors and toward automated outputs. Without explicit structure, this shift often goes unnoticed until trust, accountability, or meaning has already eroded.

Organizations typically engage with Lumenoid to:

- Define and document where responsibility begins and ends

- Prevent implied authority or overconfidence in AI outputs

- Make uncertainty visible instead of implicit

- Preserve refusal, scope reduction, and safe exits

- Ensure decisions remain inspectable under scale and automation

Lumenoid addresses these risks by enforcing external invariants: stable constraints related to meaning, uncertainty, and responsibility. These invariants do not optimize or adapt autonomously. They determine whether an output is allowed to pass through the system at all.
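
The gating behavior described above can be sketched as a fixed set of predicates that an output must satisfy before it is released. This is a minimal illustration, not Lumenoid's implementation: the `Output` fields, the three check functions, and `gate` are all hypothetical names chosen for this example, and the checks themselves are deliberately simplistic.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Output:
    """A candidate AI output plus the metadata the checks need (hypothetical shape)."""
    text: str
    uncertainty_note: Optional[str] = None    # how uncertainty is stated, if at all
    responsible_party: Optional[str] = None   # the human or role accountable for use


# An invariant returns a failure reason, or None when it is satisfied.
Invariant = Callable[[Output], Optional[str]]


def has_meaning(out: Output) -> Optional[str]:
    return None if out.text.strip() else "empty or meaningless output"


def states_uncertainty(out: Output) -> Optional[str]:
    return None if out.uncertainty_note else "uncertainty is not made explicit"


def names_responsibility(out: Output) -> Optional[str]:
    return None if out.responsible_party else "no accountable human is recorded"


# A tuple, so the invariant set is fixed and is not tuned or reordered at runtime.
INVARIANTS: Tuple[Invariant, ...] = (
    has_meaning,
    states_uncertainty,
    names_responsibility,
)


def gate(out: Output) -> Tuple[bool, List[str]]:
    """Return (allowed, reasons). The output passes only if every invariant holds."""
    reasons: List[str] = []
    for check in INVARIANTS:
        reason = check(out)
        if reason is not None:
            reasons.append(reason)
    return (not reasons, reasons)
```

In this sketch the gate never edits or ranks an output. It only answers whether the output may pass and, if not, why, which keeps the rejection itself inspectable.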

💠 Governance Boundary

A key distinction in organizational adoption is between model development and interaction governance.

Model providers remain responsible for training, capability, and internal correctness. Lumenoid governs when and how outputs are allowed to reach humans.

This separation reduces regulatory and institutional volatility. Models remain focused on reasoning and uncertainty signaling, while governance concerns such as auditability, accountability, and reversibility are handled externally.
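
One way to picture this boundary is a wrapper that treats the model as an opaque callable and owns only the release decision and the audit record. The sketch below is an assumption-laden illustration: `GovernedChannel`, `no_absolute_claims`, and the audit entry fields are hypothetical names for this example and are not part of any published Lumenoid interface.

```python
import time
from typing import Callable, Dict, List, Optional

Model = Callable[[str], str]                  # owned by the model provider
Validator = Callable[[str], Optional[str]]    # owned by the governance layer


def no_absolute_claims(text: str) -> Optional[str]:
    """Hypothetical validator: flag wording that implies certainty or authority."""
    return "absolute claim" if "guaranteed" in text.lower() else None


class GovernedChannel:
    """Wraps a model without modifying it; decides only whether output is released."""

    def __init__(self, model: Model, validators: List[Validator]) -> None:
        self._model = model
        self._validators = list(validators)
        self.audit_log: List[Dict] = []  # one entry per request, for later inspection

    def ask(self, prompt: str) -> Optional[str]:
        raw = self._model(prompt)
        failures: List[str] = []
        for validate in self._validators:
            message = validate(raw)
            if message is not None:
                failures.append(message)
        # Every request is recorded, whether or not the output is released.
        self.audit_log.append({
            "time": time.time(),
            "prompt": prompt,
            "released": not failures,
            "failures": failures,
        })
        return raw if not failures else None
```

Because the wrapper only needs a `str -> str` callable, the model can be swapped or retrained without touching the validators, and the validators can be revised without touching the model.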

💠 Choosing a Lumenoid Version

Lumenoid versions are ethical baselines, not feature tiers. Choosing a version is about selecting the minimum depth of enforcement required for a given organizational context.

Versions are intentionally frozen to provide stable reference points. Intermediate releases (e.g. v2.1, v2.2) strengthen enforcement without weakening earlier guarantees.

| Version | Status | Core Focus | Primary Structural Guarantee | When to Choose |
|---|---|---|---|---|
| v1 | Frozen · Experimental · Archival | Structural foundation | Non-authoritative containment, semantic validity, and traceable responsibility | Research, early experimentation, or minimal governance contexts where containment and rejection are sufficient and uncertainty is handled externally. |
| v2 | Frozen · Reference (via v2.1+) | Explicit uncertainty & boundary signaling | Uncertainty is surfaced, scope reduction replaces assertion, and responsibility remains human-held. | Organizational use where outputs may be interpreted as factual, authoritative, or representative and uncertainty must be explicit and auditable. |
| v3 | Frozen | Ethical constraints as invariants | Testable ethical boundaries enforced externally without directing outcomes or substituting human judgment. | Regulated or high-stakes environments where authority drift, coercion, or judgment substitution must be structurally blocked. |
| v4 | Frozen | Psychological safety validation | Structural detection and rejection of harmful interaction patterns without profiling, inference, or psychological modeling. | Contexts involving prolonged interaction or vulnerable users where autonomy, self-trust, and psychological safety must be preserved. |
| v5 | Frozen | Context-bound ethical containers | Explicit, opt-in domain containers that narrow scope instead of expanding capability or authority. | Domain-sensitive contexts (e.g. health, creative work, science) where specialization reduces ambiguity and strengthens accountability. |
| v6 | Frozen · Capstone | Pre-interaction stability under saturation | Determination of whether an interaction should occur at all, enforcing terminal outcomes (PROCEED, PAUSE, REFUSE) before model execution. | High-load, ambiguous, or high-pressure environments where the most responsible outcome may be refusal or non-response rather than continuation. |

No version removes protections introduced by earlier versions. Later versions increase constraint, not authority.
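
The v6 row in the table above describes a decision made before the model runs, with three terminal outcomes. A rough sketch of that ordering follows. The outcome names come from the table; everything else is assumed for illustration, including the `Request` fields `in_scope` and `system_saturated`, the rule that maps them to outcomes, and the `handle` helper.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional, Tuple


class Outcome(Enum):
    PROCEED = "PROCEED"
    PAUSE = "PAUSE"
    REFUSE = "REFUSE"


@dataclass(frozen=True)
class Request:
    prompt: str
    in_scope: bool           # hypothetical signal: within the declared scope?
    system_saturated: bool   # hypothetical signal: under load or pressure?


def pre_interaction_gate(req: Request) -> Outcome:
    """Decide before any model call; the most restrictive check runs first."""
    if not req.in_scope:
        return Outcome.REFUSE
    if req.system_saturated:
        return Outcome.PAUSE
    return Outcome.PROCEED


def handle(req: Request, model: Callable[[str], str]) -> Tuple[Outcome, Optional[str]]:
    """Run the model only when the gate returns PROCEED."""
    outcome = pre_interaction_gate(req)
    if outcome is not Outcome.PROCEED:
        return (outcome, None)  # terminal: the model is never executed
    return (outcome, model(req.prompt))
```

The point of the sketch is the ordering: `handle` cannot reach the model on a PAUSE or REFUSE path, so non-response is a first-class result of the gate and not an error raised afterwards.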

💠 Adaptation and Responsibility

Lumenoid is intentionally open to adaptation across legal systems, cultures, and institutional contexts. Organizations may encode local requirements, extend validation rules, or integrate selectively.

Once adapted, responsibility for ethical, legal, and operational compliance rests entirely with the adopting organization.

Responsibility Boundary

- The author maintains the reference framework and its invariants

- Organizations own adaptations and deployments

- Operators remain accountable for real-world use

Lumenoid does not automate judgment or transfer responsibility. It preserves the conditions under which responsibility remains visible, inspectable, and human-held.
